In an era where cyber threats pose significant risks to national security, the military embraces trusted computing to safeguard its technology systems. This approach involves rigorous security measures to ensure the integrity, confidentiality, and reliability of computing environments used in the military.

With trusted computing, U.S. military leaders aim to protect sensitive information and maintain operational security against increasingly sophisticated cyber attacks.

Hardware-based security

Trusted computing in military technology systems begins with hardware-based security. This involves the use of secure hardware components, such as Trusted Platform Modules (TPMs), which provide a root of trust. These components are capable of securely storing cryptographic keys, passwords, and other critical data, and form the foundation of a secure computing environment.

Richard Jaenicke, director of marketing at Green Hills Software in Santa Barbara, Calif., explains that the U.S. Department of Defense (DOD) is driving the development of a unified alignment between government and industry stakeholders on “zero trust.”

The concept of zero trust fundamentally changes the approach to network security. Unlike traditional models that assume everything inside the network is trustworthy, zero trust operates on the principle that no entity -- whether inside or outside the network -- should be trusted automatically.

“In November 2022, the Zero Trust Portfolio Management Office (ZT PfMO) within the DOD chief information office (CIO) released the DOD Zero Trust Strategy and Roadmap,” Jaenicke points out. “That roadmap includes the intent to implement almost a hundred different zero trust capabilities and activities by 2027. However, the director of the ZT PfMO, Randy Resnick, has said his office received pushback on its aggressive goals for meeting zero trust and saw a need to codify its role in the government-wide race toward network security. To that end, he said we should expect a ‘directive type’ memo that will give his office more authority to put pressure on the Defense Department to meet cybersecurity deadlines.”

Although zero trust mostly is associated with enterprise systems, Jaenicke says, it also is applicable to embedded computing systems in the field. “Most deployed embedded systems implement perimeter-based security, often as simple as a user ID/password combination granting broad admin-level privileges to change the configuration and security posture of the system,” Jaenicke says. “Implementing zero trust starts with assuming that those perimeter defenses can be breached and not implicitly trusting any application already inside the perimeter.”

A firm base

This zero-trust approach has changed the thinking on what vulnerabilities are front-of-mind for the DOD, says Massimiliano De Otto, senior field application engineer at Wind River Systems in Alameda, Calif.

“In the military sector, major threats come from DoS [denial of service] attacks and the compromising of a system by injecting malware. There has been a change in perspective over time,” Wind River’s De Otto says. “For example, in the past, people working on these systems were often considered trusted. This is not the typical scenario any longer. The introduction of zero-trust architecture in the infrastructure, more stringent secure coding standards, and continuous monitoring of deployed devices in the field are common practices. While this may be challenging in some specific areas, it has often become necessary to prevent or mitigate bigger problems.”

Another key aspect is secure boot, a process that ensures the system only boots using software that is trusted and verified. This mechanism prevents malicious software from being loaded during the startup process, thereby maintaining the integrity of the system from the outset.
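The verification step behind secure boot can be illustrated with a minimal sketch. It assumes a simplified model in which each boot stage is checked against a trusted SHA-256 digest before it is allowed to run; production secure boot typically verifies digital signatures anchored in hardware-protected keys rather than a plain digest table. The stage names and the `verify_boot_chain` function are hypothetical and exist only for illustration.

```python
import hashlib
import hmac


def digest(image: bytes) -> str:
    """Return the SHA-256 digest of a boot image as a hex string."""
    return hashlib.sha256(image).hexdigest()


def verify_boot_chain(stages, trusted_digests):
    """Check each boot stage in order; halt at the first one that fails.

    stages          -- list of (name, image_bytes) pairs, in boot order
    trusted_digests -- dict mapping stage name to its expected digest
                       (assumed to live in tamper-resistant storage)
    """
    booted = []
    for name, image in stages:
        expected = trusted_digests.get(name)
        # Unknown stages and digest mismatches are both refused.
        if expected is None or not hmac.compare_digest(digest(image), expected):
            raise RuntimeError(f"boot halted: {name} failed verification")
        booted.append(name)
    return booted
```

In this sketch a modified kernel image stops the boot sequence before the kernel runs, while the unmodified bootloader ahead of it is still accepted.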

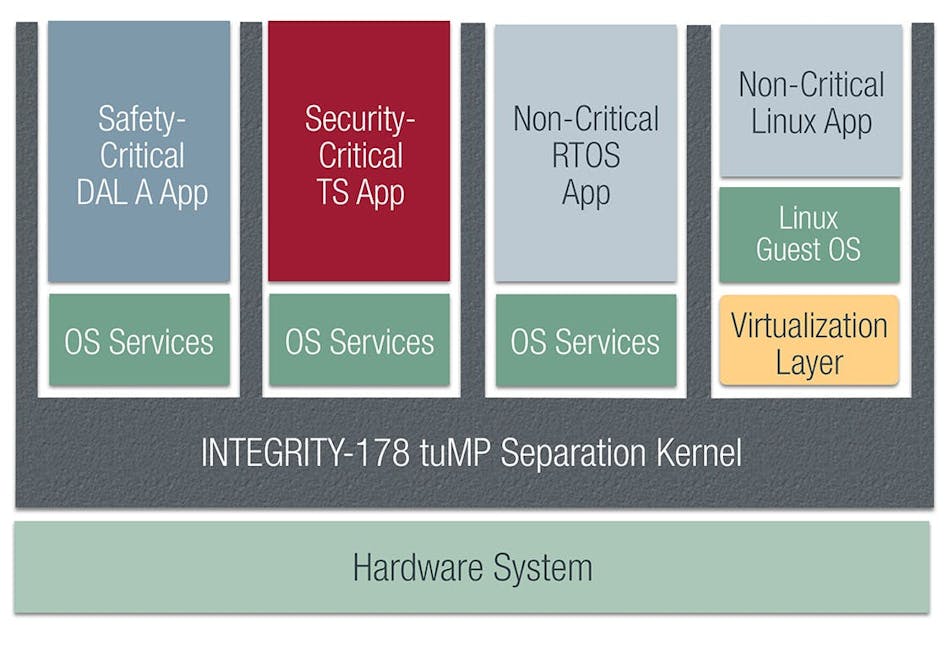

“The most secure embedded systems use a hardened separation kernel to isolate applications, providing a base for zero trust,” says Green Hills’s Jaenicke. “Each application is isolated in a partition such that any breach or malware in one partition is guaranteed not to migrate or affect an application in any other partition. A separation kernel limits access to the least privilege necessary to get the job done. It also follows a policy of ‘deny by default,’ allowing only pre-approved information flow between partitions as defined in a static configuration file. Separation kernels have a very small attack surface and can be small enough to enable security evaluation of each line of code.”
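The “deny by default” policy Jaenicke describes can be sketched in a few lines. This is an illustration only, not how INTEGRITY-178 or any other separation kernel is implemented: the partition names and the `flow_permitted` function are hypothetical, and a real kernel enforces such a table in privileged code backed by hardware memory protection.

```python
from typing import FrozenSet, Tuple

# Hypothetical static configuration: the only (source, destination)
# information flows that have been pre-approved. Everything else is denied.
ALLOWED_FLOWS: FrozenSet[Tuple[str, str]] = frozenset({
    ("sensor_input", "mission_app"),
    ("mission_app", "crypto_service"),
})


def flow_permitted(source: str, destination: str,
                   allowed: FrozenSet[Tuple[str, str]] = ALLOWED_FLOWS) -> bool:
    """Return True only if this exact one-way flow is in the static table."""
    return (source, destination) in allowed
```

Because the table lists one-way flows, approving `sensor_input` to `mission_app` does not approve the reverse direction, and a partition missing from the table cannot communicate with anything.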

Jaenicke says that Green Hills’s INTEGRITY-178 separation kernel has a formal proof of correctness. “The INTEGRITY-178 tuMP secure RTOS runs on a variety of ARM cores, including the Cortex-A53 found in many FPGAs, and has available security life-cycle evidence to support system certification. INTEGRITY-178 tuMP is designed to Common Criteria EAL 6+ and NSA-defined ‘high robustness’ for resiliency against threats from well-funded and determined actors such as hostile nation-states.”

Airborne approach

De Otto from Wind River notes a convergence between traditional trusted computing and conventional computing.

“In other words, there is more awareness that cyber threats can happen everywhere and that the impact caused by them can be catastrophic. As a result, almost any new design in the military sector must consider security aspects,” De Otto says. “This can be disruptive in specific areas, such as avionics, since it is mandatory to adopt security strategies following the ED-20x/DO-3xx set of documents issued by [civil regulators] EUROCAE and FAA. New processes must be put in place, and different skills and mindsets need to cooperate.”

Avionics designers avoid unproven hardware mechanisms in complex CPUs at high design assurance levels (DALs), Jaenicke says, and the same cautious approach is being embraced for high-security computing outside of airborne electronics.

“The reason for avoiding such so-called ‘novel’ hardware is the difficulty in proving the assurance level and integrity of those mechanisms,” Jaenicke explains. “An example of a well-understood and proven CPU mechanism is the memory management unit (MMU), which is a cornerstone of safety and security that partitions memory address space. On the other hand, an example of a novel hardware mechanism is single root I/O virtualization (SR-IOV). SR-IOV is a hardware-based virtualization solution that allows one physical PCI Express device to be used by multiple virtual machines simultaneously without mediation from the hypervisor, thus significantly decreasing the overhead of I/O virtualization.”

Jaenicke continues, “However, the safety and security of SR-IOV hardware have not been shown. To the contrary, several Common Vulnerabilities and Exposures (CVEs) have been filed related to SR-IOV, and the NSA has banned SR-IOV from use in cross domain systems (CDS), which protect National Security Systems (NSS). Some hypervisors require SR-IOV to operate, so software developers should be careful to choose a virtualization solution that does not. For example, the virtual machine monitor (VMM) for the INTEGRITY-178 tuMP RTOS does not use SR-IOV.”

Looking back

Even as zero trust is built into most modern technology, safety and security concerns remain in legacy military systems, where security problems are difficult to spot because of the proprietary software employed, De Otto says. Vulnerabilities in such proprietary code may not be listed in standard knowledge repositories like the National Vulnerability Database.

“However, in general, it is still possible to use the practices adopted for current developments: using vulnerability scanners and performing static analysis of all the code or adopting threat analysis methods -- such as MITRE,” De Otto says. “Difficulties arise when legacy code is written in languages that are different than C, C++ or Ada, such as FORTRAN. Manual analysis is required in that case and that might be impractical, time-consuming, or too expensive. Mitigation can also be challenging, since mechanisms to allow on-line updates, or updates in general, might not be available. A general strategy for a legacy system is difficult. They typically must be addressed on a case-by-case basis.”

Eyes on AI

As industry looks for ways to harness the potential of machine learning (ML) and artificial intelligence (AI), secure systems used by commercial aviation and military branches will be slower to adopt both until their relative safety can be assured.

“The trend of AI is growing, and it is already being used in software development, as in the case of security scanners that adopt AI to analyze and identify potentially misbehaving code,” says De Otto at Wind River. “However, AI is currently scarce in deployed systems, because there are many concerns about the security of AI itself, and there are challenges in terms of protecting AI itself from external attacks. This is a work in progress and as AI’s role continues to grow, it will be important to use it within the context of proper regulations.”

Jaenicke at Green Hills says that ML “excels at pattern matching and can improve the efficiency and effectiveness of intrusion detection and response based on predictive variations of known attacks. Generative Artificial Intelligence (GenAI) can be used to speed decision-making when intrusions are detected.

“A big challenge with intrusion detection is keeping up with the data flow for 100 Gigabit Ethernet. FPGAs are ideal for this type of high-performance, real-time computation, which involves identifying multiple patterns in each of tens of thousands of intrusion signatures. The security of the intrusion detection system itself also is a key consideration. Luckily, modern FPGAs are the most secure type of programmable hardware, often being used to enhance secure boot capability for CPU systems. Having a secure operating system running on the FPGA’s processor cores adds another layer of security assurance.”
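To make the multi-pattern matching problem concrete, here is a small software sketch that scans a payload for many signatures in a single pass. It uses the Aho-Corasick algorithm, which is a swapped-in, well-known technique chosen for illustration; the article does not say which algorithm any FPGA-based system uses, and hardware implementations differ substantially. The signatures and function names are hypothetical.

```python
from collections import deque


def build_automaton(signatures):
    """Build an Aho-Corasick automaton from an iterable of non-empty bytes signatures."""
    goto, fail, out = [{}], [0], [[]]  # node 0 is the root
    for sig in signatures:
        node = 0
        for byte in sig:
            nxt = goto[node].get(byte)
            if nxt is None:
                goto.append({})
                fail.append(0)
                out.append([])
                nxt = len(goto) - 1
                goto[node][byte] = nxt
            node = nxt
        out[node].append(sig)
    # Breadth-first pass to compute failure links and merge outputs.
    queue = deque(goto[0].values())
    while queue:
        node = queue.popleft()
        for byte, child in goto[node].items():
            f = fail[node]
            while f and byte not in goto[f]:
                f = fail[f]
            fail[child] = goto[f].get(byte, 0)
            out[child] = out[child] + out[fail[child]]
            queue.append(child)
    return goto, fail, out


def scan(payload, automaton):
    """Return (start_offset, signature) for every signature occurrence in payload."""
    goto, fail, out = automaton
    node, hits = 0, []
    for pos, byte in enumerate(payload):
        while node and byte not in goto[node]:
            node = fail[node]
        node = goto[node].get(byte, 0)
        for sig in out[node]:
            hits.append((pos - len(sig) + 1, sig))
    return hits
```

The payload is read once regardless of how many signatures are loaded, which is the property that matters when the signature set runs to tens of thousands of entries.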

Green Hills’s Jaenicke says that while GenAI still is in its early developmental stages, it shows potential for offering insights to security analysts regarding the type and extent of detected threats and recommended responses. Although the large models supporting GenAI are trained on GPUs, high-performance CPUs can be a more cost-efficient option for running the inference engine, especially for specialized models. Securing these inference engines is challenging due to the large attack surface of both the hardware and software involved.
